History

1. How do computers read the alphabet?

2. The background, in short

3. All letters in the alphabet have a number — but where do those numbers come from?

In Morse:

`.-.. --- .--` = Low

In Morse:

`. -. . -- -.--` = Enemy
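The two Morse examples above use the same ten dots and dashes; only the pauses between letters tell "Low" and "Enemy" apart. Here is a minimal Python sketch to check that. The `MORSE` table and the `encode` helper are illustrative names of my own, and the table only covers the letters needed here.

```python
# Partial International Morse table: only the letters used in this example.
MORSE = {
    "E": ".", "L": ".-..", "M": "--", "N": "-.",
    "O": "---", "W": ".--", "Y": "-.--",
}

def encode(word, gap=" "):
    """Encode a word in Morse, putting `gap` between letters."""
    return gap.join(MORSE[ch] for ch in word.upper())

print(encode("Low"))    # .-.. --- .--
print(encode("Enemy"))  # . -. . -- -.--

# Drop the gaps between letters and the two words become identical.
print(encode("Low", gap="") == encode("Enemy", gap=""))  # True
```

Because Morse letters have different lengths, the receiver depends on the gaps to know where one letter ends and the next begins.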

The history of computer science is beautiful.

When I first discovered how the ASCII standard was invented, it was truly an "aha!" moment.

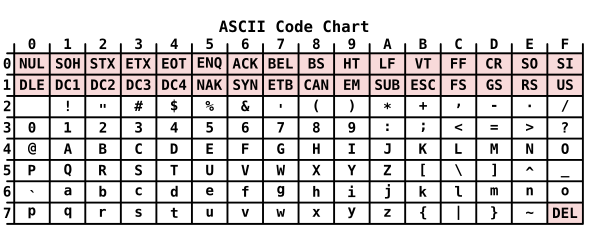

Have you seen the ASCII table?

Each character has its own numeric value, usually shown in decimal form.
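If you want to see those values for yourself, Python's built-in `ord` and `chr` convert between a character and its number. The helper name `ascii_codes` below is just something I made up for this sketch.

```python
def ascii_codes(text):
    """Return the decimal code of each character in `text`."""
    return [ord(ch) for ch in text]

print(ascii_codes("Low"))  # [76, 111, 119]
print(chr(65))             # A

# The same value in decimal and hexadecimal, as ASCII tables often show it.
for ch in "Aa0":
    print(repr(ch), ord(ch), hex(ord(ch)))
```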

But how did we come up with these numbers? They can’t just appear from outer space, right?

This is where history comes in.

Have you heard of Morse code? I assume you have — but what does it have to do with ASCII?

Watch this short video on Morse code to get a feel for how it works. Then come back here, and let’s tie it all together with ASCII: https://www.youtube.com/watch?v=xsDk5_bktFo

"Hotel... 4 dits, like a galloping horse in a hurry..."

It’s beautiful how we humans invented a system to communicate using a single tone over electricity, one letter at a time.

So, how does ASCII relate to all this?

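Well, the roots go back quite a bit, to the telegraph codes that came before it. But ASCII as we know it was developed in the early 1960s by a committee of the American Standards Association (the predecessor of ANSI), and it was first published in 1963.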

It was designed to improve how teleprinters communicated, and it encodes every character in exactly 7 bits, which gives 128 possible codes.
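To make the 7-bit idea concrete, here is a small sketch that prints each character's code as a fixed-width, 7-digit binary string. The function name `to_7bit` is my own, and the range check simply rejects anything outside the 128 ASCII codes.

```python
def to_7bit(text):
    """Return each character's ASCII code as a 7-bit binary string."""
    bits = []
    for ch in text:
        code = ord(ch)
        if code > 127:  # 7 bits can only hold the values 0..127
            raise ValueError(f"{ch!r} is not an ASCII character")
        bits.append(format(code, "07b"))
    return bits

print(to_7bit("Low"))  # ['1001100', '1101111', '1110111']
```

Unlike Morse, every character takes up the same number of bits, so no pause is needed to tell where one letter ends and the next begins.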

Interestingly, ASCII only became an official Internet Standard in 2015, when RFC 20 (originally published in 1969) was given that status. By then it had been around for decades, quietly powering much of computing history.